Introduction: From Manual Moderation to Intelligent Automation

In the early days of the internet, content moderation was a largely manual task. Small teams of human reviewers scanned forums, comment sections, and social platforms, removing harmful or inappropriate posts one by one. That approach worked when online communities were small. Today, with billions of users generating text, images, and videos every minute, manual moderation alone is no longer realistic.

This explosion of user-generated content has created serious challenges: misinformation, hate speech, cyberbullying, spam, and explicit material now spread faster than ever. At the same time, platforms face growing pressure to protect users, advertisers, and brand reputation while respecting free expression. This is where AI in content moderation has emerged as a critical solution. By combining machine learning, natural language processing (NLP), and computer vision, AI-powered moderation systems aim to keep online spaces safer and cleaner at a scale manual review cannot reach.

What Is AI in Content Moderation?

AI in content moderation refers to the use of artificial intelligence technologies to automatically analyze, classify, and manage user-generated content across digital platforms. These systems are designed to detect content that violates community guidelines or legal standards, often in real time.

Key Technologies Behind AI Moderation

AI-based moderation systems rely on several core technologies working together:

- Machine Learning (ML): Learns patterns from large datasets of previously moderated content.

- Natural Language Processing (NLP): Understands context, sentiment, and intent in text-based content.

- Computer Vision: Analyzes images and videos to identify nudity, violence, or unsafe visuals.

- Speech Recognition: Converts audio into text for moderation in podcasts, videos, and voice chats.

Together, these technologies enable automated content moderation at a scale humans simply cannot match.
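
To make the machine learning bullet concrete, here is a minimal sketch of a model learning patterns from previously moderated content. It is a toy multinomial Naive Bayes classifier in plain Python. The training examples, the labels ("spam" and "ok"), and the function names are all made up for illustration, and production systems rely on far larger datasets and more capable models.

```python
import math
from collections import Counter, defaultdict

def tokenize(text):
    """Lowercase and split on whitespace; real systems use richer tokenizers."""
    return text.lower().split()

def train(examples):
    """examples: list of (text, label) pairs from past moderation decisions."""
    word_counts = defaultdict(Counter)  # label -> word frequencies
    label_counts = Counter()            # label -> number of examples
    vocab = set()
    for text, label in examples:
        tokens = tokenize(text)
        word_counts[label].update(tokens)
        label_counts[label] += 1
        vocab.update(tokens)
    return word_counts, label_counts, vocab

def predict(model, text):
    """Return the label with the highest log-probability (add-one smoothing)."""
    word_counts, label_counts, vocab = model
    total = sum(label_counts.values())
    best_label, best_score = None, float("-inf")
    for label in label_counts:
        score = math.log(label_counts[label] / total)
        denom = sum(word_counts[label].values()) + len(vocab)
        for token in tokenize(text):
            score += math.log((word_counts[label][token] + 1) / denom)
        if score > best_score:
            best_label, best_score = label, score
    return best_label

# Hypothetical, hand-written training data for illustration only.
EXAMPLES = [
    ("buy cheap followers now", "spam"),
    ("click here to win free money", "spam"),
    ("free followers click now", "spam"),
    ("great photo thanks for sharing", "ok"),
    ("thanks for the helpful answer", "ok"),
    ("see you at the meetup tomorrow", "ok"),
]

model = train(EXAMPLES)
```

With this toy data, `predict(model, "win free followers")` returns `"spam"` and `predict(model, "thanks for sharing the photo")` returns `"ok"`. The model only knows the words it has seen, which is one reason real systems need large and regularly refreshed training sets.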

Why Content Moderation Needs AI Today

Modern platforms operate at massive scale. Millions of posts per hour make manual review slow, expensive, and inconsistent. AI helps bridge this gap.

Core Challenges AI Addresses

- Volume: Billions of posts across social media, forums, and marketplaces

- Speed: Harmful content can go viral within minutes

- Cost: Large human moderation teams are expensive to maintain

- Consistency: Human decisions can vary due to fatigue or bias

AI doesn’t replace human moderators entirely, but it acts as a powerful first line of defense.

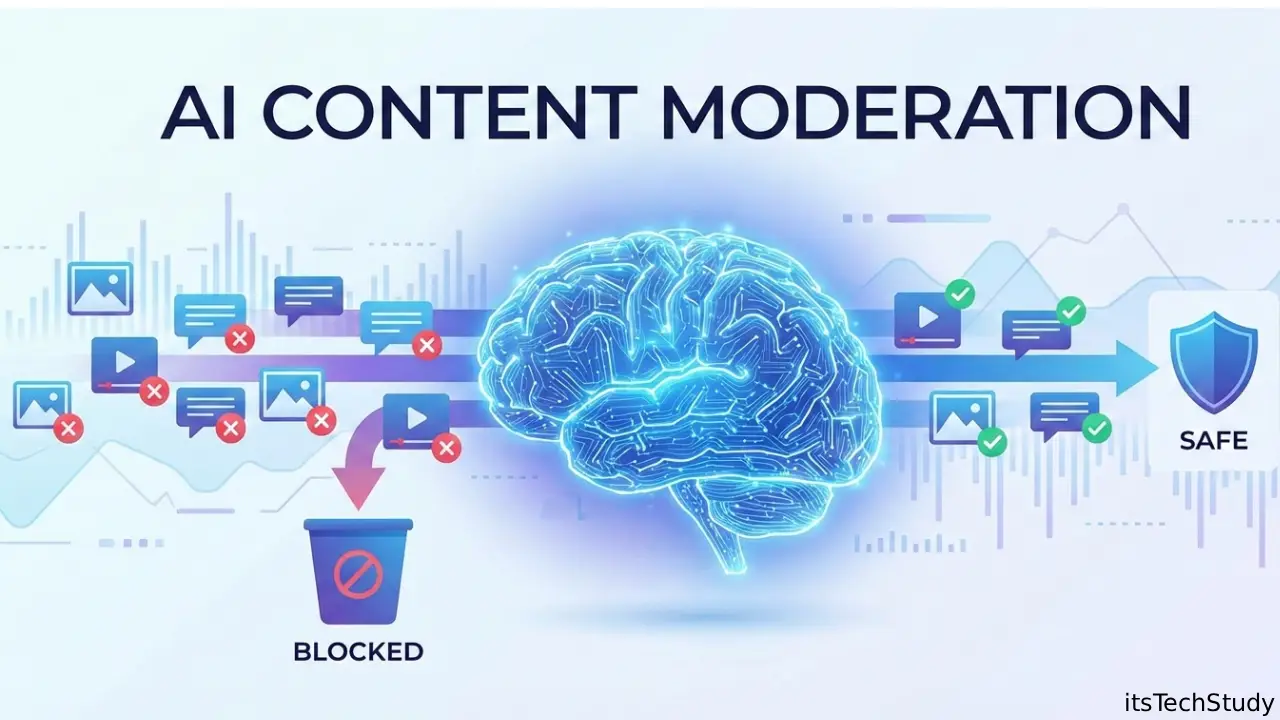

How AI Content Moderation Works in Practice

AI moderation systems typically follow a structured workflow that blends automation with human oversight.

Step-by-Step Moderation Process

- Content Ingestion: User-generated text, images, video, or audio is uploaded.

- AI Analysis: Algorithms scan the content using trained models.

- Risk Scoring: Content is assigned a probability score for policy violation.

- Automated Action: Clear-cut cases are handled automatically: content scored as very low risk is approved, and content scored as a high-confidence violation is removed.

- Human Review: Edge cases are escalated to human moderators for final decisions.

This hybrid approach balances efficiency with accuracy.
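
The risk scoring, automated action, and human review steps above can be sketched as a simple routing function. This is an illustrative sketch, not any platform's actual logic: the threshold values, the `Decision` type, and the `route` function are assumptions, and real thresholds are tuned per policy.

```python
from dataclasses import dataclass

# Hypothetical thresholds; real values are tuned per policy and platform.
APPROVE_BELOW = 0.20
REMOVE_ABOVE = 0.95

@dataclass
class Decision:
    content_id: str
    risk_score: float
    action: str  # "approve", "remove", or "human_review"

def route(content_id: str, risk_score: float) -> Decision:
    """Map a model's violation probability to one of three actions."""
    if not 0.0 <= risk_score <= 1.0:
        raise ValueError("risk_score must be a probability in [0, 1]")
    if risk_score < APPROVE_BELOW:
        action = "approve"        # clearly low risk
    elif risk_score > REMOVE_ABOVE:
        action = "remove"         # high-confidence violation
    else:
        action = "human_review"   # edge case, escalate to a moderator
    return Decision(content_id, risk_score, action)
```

Widening the gap between the two thresholds sends more content to human reviewers. Narrowing it automates more decisions, at the cost of more automated mistakes.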

AI vs Human Moderation: A Practical Comparison

| Aspect | AI Content Moderation | Human Moderation |
|---|---|---|
| Speed | Near real-time | Slower, manual |
| Scalability | Handles millions of posts | Limited by team size |
| Consistency | Rule-based, uniform | Subjective, varies |
| Context Understanding | Improving, but limited | Strong contextual judgment |
| Cost | Lower long-term cost | High operational cost |

This comparison shows why many platforms now rely on AI-first moderation supported by human expertise.

Pros and Cons of AI in Content Moderation

Advantages of AI Content Moderation

- Scalability: Handles volumes of content far beyond what human teams can review

- Speed: Can flag harmful content before it spreads widely

- Cost Efficiency: Reduces long-term moderation expenses

- 24/7 Operation: No fatigue or downtime

Limitations and Challenges

- Context Errors: Sarcasm, humor, or cultural nuance can be misread

- Bias Risks: Models may reflect biases in training data

- False Positives: Legitimate content may be incorrectly flagged

- Transparency Issues: Users may not understand why content was removed

Understanding these pros and cons helps platforms design more responsible moderation systems.
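
The false-positive limitation is easier to reason about with numbers. The sketch below measures, on a small labeled sample, how the flagging threshold trades false positives (legitimate content flagged) against false negatives (violations missed). The sample data and the `error_rates` function are invented for illustration.

```python
def error_rates(scored, threshold):
    """scored: list of (risk_score, is_violation) pairs from a labeled sample.

    Returns (false_positive_rate, false_negative_rate) when everything at or
    above the threshold is flagged.
    """
    fp = sum(1 for s, v in scored if s >= threshold and not v)
    fn = sum(1 for s, v in scored if s < threshold and v)
    negatives = sum(1 for _, v in scored if not v)
    positives = sum(1 for _, v in scored if v)
    fpr = fp / negatives if negatives else 0.0
    fnr = fn / positives if positives else 0.0
    return fpr, fnr

# Made-up audit sample: (model score, human-confirmed violation?)
SAMPLE = [
    (0.10, False), (0.30, False), (0.55, False), (0.70, False),
    (0.40, True), (0.65, True), (0.85, True), (0.95, True),
]

for threshold in (0.5, 0.8):
    fpr, fnr = error_rates(SAMPLE, threshold)
    print(f"threshold={threshold}: FPR={fpr:.2f}, FNR={fnr:.2f}")
```

On this made-up sample, raising the threshold from 0.5 to 0.8 lowers the false positive rate from 0.50 to 0.00 but raises the false negative rate from 0.25 to 0.50. Neither error can be pushed to zero without affecting the other.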

Real-World Use Cases of AI Content Moderation

AI moderation is already widely used across industries:

- Social Media Platforms: Detecting hate speech, misinformation, and harassment

- Online Marketplaces: Removing fake listings, scams, and prohibited products

- Gaming Communities: Monitoring chat toxicity and abusive behavior

- Media Platforms: Filtering explicit images and copyrighted content

As AI models mature, these use cases continue to expand.

Best Practices for Implementing AI Moderation Systems

For platforms considering AI-driven moderation, a thoughtful approach is essential.

Key Implementation Tips

- Combine AI automation with human review for balanced decisions

- Regularly audit and retrain models to reduce bias

- Clearly communicate community guidelines to users

- Offer appeal mechanisms for disputed moderation decisions

Responsible deployment builds trust with users while maintaining safety.
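
One way to approach the bias audit is to compare false positive rates across groups of content, such as languages or dialects. The following is a minimal sketch under that assumption. The group tags, the records, and the `false_positive_rate_by_group` function are hypothetical, and a real audit would need larger samples and statistical care.

```python
from collections import defaultdict

def false_positive_rate_by_group(records):
    """records: iterable of (group, was_flagged, is_violation) tuples.

    Returns {group: share of non-violating items that were flagged}.
    """
    flagged = defaultdict(int)
    benign = defaultdict(int)
    for group, was_flagged, is_violation in records:
        if is_violation:
            continue  # only benign content can be a false positive
        benign[group] += 1
        if was_flagged:
            flagged[group] += 1
    return {g: flagged[g] / benign[g] for g in benign}

# Hypothetical audit records; "group" might be a language or dialect tag.
AUDIT = [
    ("lang_a", False, False), ("lang_a", True, False),
    ("lang_a", False, False), ("lang_a", False, False),
    ("lang_b", True, False), ("lang_b", True, False),
    ("lang_b", False, False), ("lang_b", False, False),
    ("lang_b", True, True),
]

rates = false_positive_rate_by_group(AUDIT)
```

Here `rates` comes out as `{"lang_a": 0.25, "lang_b": 0.5}`. A gap like that between groups would be a signal to review the training data for the over-flagged group before retraining.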

The Future of AI in Content Moderation

The future of AI in content moderation lies in smarter, more transparent systems. Advances in explainable AI, multimodal models, and real-time learning will improve accuracy and trust. Instead of simply removing content, future systems may focus more on prevention, education, and healthier online interactions.

Conclusion: A Smarter Path to Safer Digital Spaces

AI in content moderation has become essential for managing today’s vast digital ecosystems. By combining speed, scalability, and steadily improving accuracy, AI helps platforms protect users while supporting growth. When paired with human judgment and ethical design, AI-driven moderation offers a practical, forward-looking solution for building safer and more responsible online communities.

Frequently Asked Questions (FAQ)

Q1: Is AI content moderation completely automated?

Ans: No. Most platforms use a hybrid approach where AI handles high-volume tasks and humans review complex or borderline cases for better accuracy.

Q2: Can AI understand context and sarcasm?

Ans: AI has improved significantly with NLP, but understanding sarcasm, cultural references, and nuanced language remains a challenge in some cases.

Q3: Is AI moderation biased?

Ans: AI can reflect biases present in training data. Regular audits, diverse datasets, and human oversight help reduce this risk.

Q4: Does AI moderation violate free speech?

Ans: AI moderation enforces platform-specific rules, not opinions. Transparent policies and appeal systems help balance safety and expression.

Q5: Is AI content moderation expensive to implement?

Ans: Initial setup can be costly, but over time it is often more cost-effective than maintaining large manual moderation teams.
