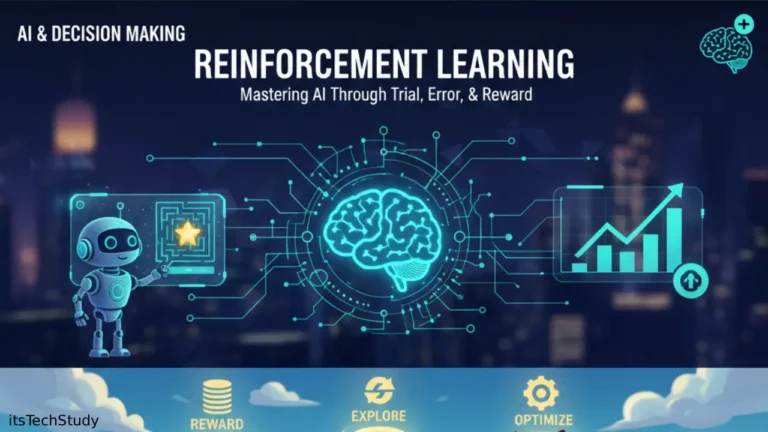

Introduction: Why Traditional Reinforcement Learning Hit a Wall

For years, reinforcement learning (RL) followed a fairly simple promise: let an agent interact with an environment, reward good behavior, penalize bad decisions, and eventually intelligence emerges. Early successes using Q-learning proved this idea could work, but only in small, well-defined environments.

As soon as problems became complex (think video games, robotics, or real-world decision systems), classic Q-learning started to collapse under its own weight. The reason was painfully clear: state spaces grew too large to store Q-values in tables. Even a simple game can have millions of states, and a table needs one entry for every state-action pair, which makes the tabular approach impractical.

This bottleneck forced researchers to ask a critical question:

What if neural networks could approximate Q-values instead of storing them explicitly?

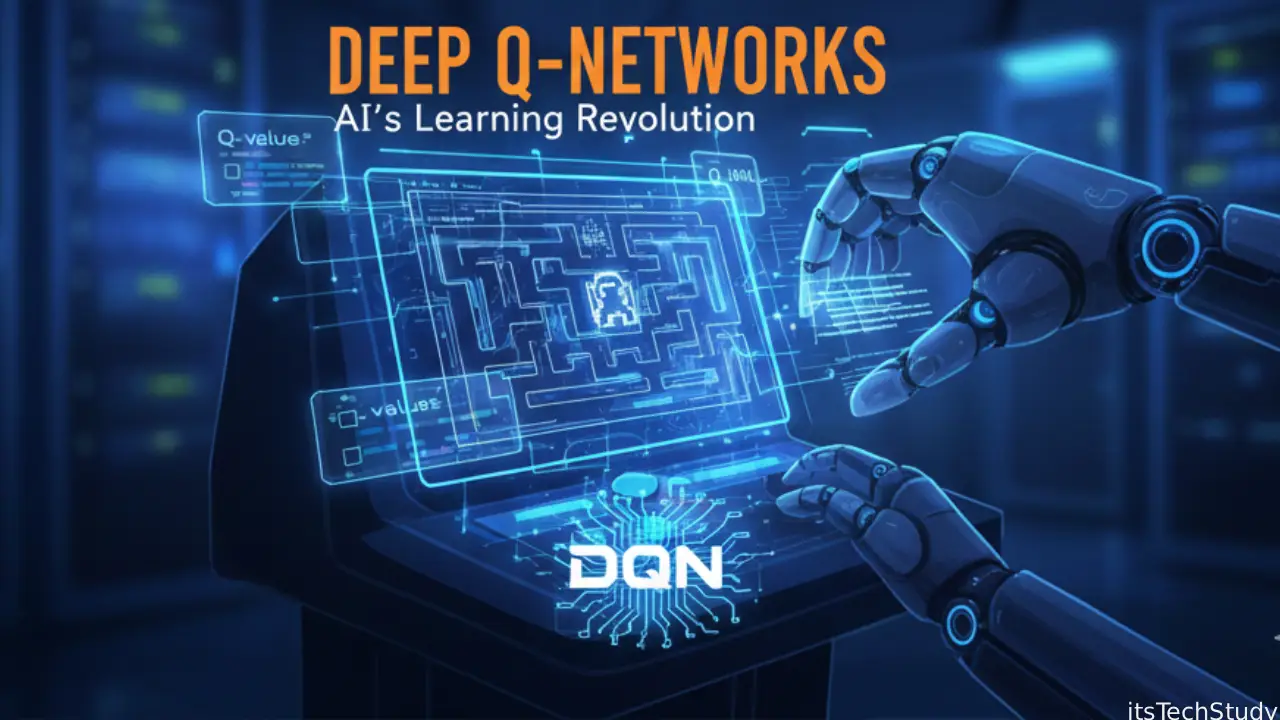

That question led to one of the most important breakthroughs in modern AI: Deep Q-Networks (DQN). By combining deep learning with reinforcement learning, DQN made it possible for machines to learn directly from raw, high-dimensional data, and it changed the trajectory of artificial intelligence research.

What Is a Deep Q-Network (DQN)?

A Deep Q-Network (DQN) is a reinforcement learning algorithm that uses a deep neural network to approximate the Q-value function. Instead of relying on a lookup table, DQN learns a function that maps a state and an action to the expected cumulative (discounted) future reward.

At its core, DQN answers one fundamental question:

Given the current state, which action should the agent take to maximize long-term reward?
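
In standard RL notation (the symbols below are the conventional ones and are an assumption here, since the article does not define any), the function being approximated is the optimal action-value function, which satisfies the Bellman optimality equation:

```latex
Q^*(s, a) = \mathbb{E}\left[\, r + \gamma \max_{a'} Q^*(s', a') \;\middle|\; s, a \,\right],
\qquad
a_{\text{greedy}} = \arg\max_{a} Q(s, a; \theta)
```

Here $s$ is the current state, $a$ the action, $r$ the reward, $s'$ the next state, $\gamma \in [0, 1)$ a discount factor, and $\theta$ the weights of the network that approximates $Q^*$. Answering the question above then amounts to picking the action with the largest predicted Q-value.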

Key Components of DQN

DQN blends concepts from both reinforcement learning and deep learning:

- Agent – The decision-maker (AI model)

- Environment – The world the agent interacts with

- State – The current situation observed by the agent

- Action – A choice the agent can make

- Reward – Feedback received after an action

- Q-function – Estimates the value of taking an action in a state

What makes DQN special is its ability to generalize to states it has never seen, something tabular Q-learning cannot do because a table holds no estimate for a state that was never visited.

Why Deep Q-Networks Were a Game-Changer

The real breakthrough moment for DQN came when researchers at DeepMind demonstrated that an agent could learn to play Atari games directly from raw pixel input, with no handcrafted features and no game-specific rules.

This achievement proved that DQN could:

- Handle high-dimensional input

- Learn end-to-end decision-making

- Adapt to complex, dynamic environments

Problems DQN Solved

- State space explosion in traditional Q-learning

- Manual feature engineering

- Limited scalability of tabular methods

In short, DQN made reinforcement learning practical for problems with large, high-dimensional state spaces.

How Deep Q-Networks Work (Step-by-Step)

1. Neural Network as a Q-Function Approximator

Instead of storing Q-values, DQN uses a neural network that takes a state as input and outputs Q-values for all possible actions.
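
A minimal sketch of that idea, using only the Python standard library so it runs without a deep-learning framework. The layer sizes, the `init_layer`, `dense`, and `q_values` helpers, and the example state are all illustrative assumptions. A practical DQN would use a framework with convolutional layers and trained weights.

```python
import random

def init_layer(n_in, n_out, rng):
    """Return (weights, biases) for a dense layer with small random weights."""
    weights = [[rng.uniform(-0.1, 0.1) for _ in range(n_in)] for _ in range(n_out)]
    biases = [0.0] * n_out
    return weights, biases

def dense(x, layer, relu=False):
    """Apply a dense layer: y = W x + b, optionally followed by ReLU."""
    weights, biases = layer
    out = [sum(w * xi for w, xi in zip(row, x)) + b for row, b in zip(weights, biases)]
    return [max(0.0, v) for v in out] if relu else out

def q_values(state, network):
    """Forward pass: one state in, one Q-value per action out."""
    hidden, output = network
    return dense(dense(state, hidden, relu=True), output)

rng = random.Random(0)
STATE_DIM, HIDDEN, N_ACTIONS = 4, 8, 2   # illustrative sizes, not from the article
network = (init_layer(STATE_DIM, HIDDEN, rng), init_layer(HIDDEN, N_ACTIONS, rng))

state = [0.1, -0.2, 0.05, 0.3]           # a made-up observation
qs = q_values(state, network)            # list with one entry per action
greedy_action = max(range(N_ACTIONS), key=lambda a: qs[a])
print(qs, greedy_action)
```

Because the network returns a Q-value for every action at once, choosing the greedy action takes a single forward pass rather than one pass per action.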

2. Experience Replay

To stabilize learning, DQN stores past experiences in a replay buffer. During training, random samples are drawn from this buffer, which:

- Breaks correlation between sequential experiences

- Improves data efficiency

- Reduces variance during updates
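
A replay buffer can be sketched in a few lines. The `ReplayBuffer` class, the `Transition` tuple, and the capacity below are hypothetical names and values chosen for illustration. The only requirements taken from the text are a bounded store of past experiences and uniform random sampling.

```python
import random
from collections import deque, namedtuple

# One stored experience: what the agent saw, did, and got back.
Transition = namedtuple("Transition", ["state", "action", "reward", "next_state", "done"])

class ReplayBuffer:
    """Fixed-size buffer; the oldest experiences are dropped when it is full."""

    def __init__(self, capacity, seed=None):
        self.memory = deque(maxlen=capacity)
        self.rng = random.Random(seed)

    def push(self, state, action, reward, next_state, done):
        self.memory.append(Transition(state, action, reward, next_state, done))

    def sample(self, batch_size):
        # Uniform sampling without replacement breaks up consecutive experiences.
        return self.rng.sample(list(self.memory), batch_size)

    def __len__(self):
        return len(self.memory)

buffer = ReplayBuffer(capacity=5, seed=0)
for t in range(8):                       # push 8 experiences into a buffer of size 5
    buffer.push(state=t, action=t % 2, reward=1.0, next_state=t + 1, done=False)
batch = buffer.sample(3)
print(len(buffer), [tr.state for tr in batch])
```

Each stored experience can be sampled many times before it is evicted, which is where the improvement in data efficiency comes from.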

3. Target Network for Stability

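DQN introduces a target network, a delayed copy of the main (online) network, to compute the target Q-values. Because the copy's weights stay fixed between periodic updates, the targets do not shift with every gradient step, which markedly reduces training instability.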
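
Written out in conventional notation (assumed here, since the article does not define any symbols), with $\theta$ for the online weights and $\theta^{-}$ for the target-network weights, the training target and loss for a sampled transition $(s, a, r, s')$ are:

```latex
y = r + \gamma \max_{a'} Q(s', a'; \theta^{-}),
\qquad
L(\theta) = \mathbb{E}\left[ \big( y - Q(s, a; \theta) \big)^{2} \right]
```

For a terminal transition the target is simply $y = r$. The squared error is shown for simplicity. Implementations often substitute a Huber-style loss to limit the effect of large errors.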

Core DQN Architecture Explained

Online Network vs Target Network

- Online Network – Learns and updates weights continuously

- Target Network – Updates periodically to provide stable learning targets

This dual-network setup is one of the most critical innovations behind DQN’s success.
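
The interplay between the two networks can be sketched as follows. The dictionary of weights, the `maybe_sync` and `td_target` helpers, and the `SYNC_EVERY` interval are hypothetical stand-ins. The sketch shows a periodic hard copy, which is one common way to keep the target network delayed.

```python
import copy

GAMMA = 0.99
SYNC_EVERY = 1000    # illustrative interval, an assumption rather than a recommended value

def td_target(reward, next_q_target, done, gamma=GAMMA):
    """y = r for terminal transitions, else r + gamma * max_a' Q_target(s', a')."""
    return reward if done else reward + gamma * max(next_q_target)

def maybe_sync(online_params, target_params, step, sync_every=SYNC_EVERY):
    """Return a fresh copy of the online weights every `sync_every` steps."""
    if step % sync_every == 0:
        return copy.deepcopy(online_params)
    return target_params

online = {"w": [0.0, 0.0]}               # stand-in for the online network's weights
target = copy.deepcopy(online)           # the target network starts as an exact copy

for step in range(1, 2001):
    online["w"][0] += 0.001              # stand-in for a gradient update
    target = maybe_sync(online, target, step)

print(td_target(1.0, [0.5, 2.0], done=False, gamma=0.5))   # 2.0
```

Between syncs the online weights keep moving while the targets computed from `target` stay put, which is the stability property the dual-network setup is meant to provide.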

Deep Q-Network Training Process

- Observe the current state

- Choose an action using an ε-greedy strategy

- Receive reward and next state

- Store experience in replay memory

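- Sample a random mini-batch from replay memory

- Update the online network by minimizing the error between its predicted Q-values and the target Q-values

- Periodically copy the online network's weights into the target network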
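
The steps above can be put together in a small, dependency-free sketch. Everything specific here is an assumption made for illustration: the five-state chain environment (`env_step`), the hyperparameter values, and above all the linear Q-function on one-hot features, which stands in for the deep network so that the gradient step can be written by hand.

```python
import copy
import random
from collections import deque

N_STATES, N_ACTIONS = 5, 2               # toy chain: action 0 = left, 1 = right
GAMMA, LR = 0.9, 0.1
BATCH_SIZE, SYNC_EVERY = 8, 20
rng = random.Random(0)

def one_hot(s):
    return [1.0 if i == s else 0.0 for i in range(N_STATES)]

def env_step(s, a):
    """Hypothetical environment: reward 1 for reaching the right end of the chain."""
    s2 = max(0, s - 1) if a == 0 else s + 1
    done = s2 == N_STATES - 1
    return s2, (1.0 if done else 0.0), done

def q_values(w, x):
    """Linear stand-in for the Q-network: one output per action."""
    return [sum(wi * xi for wi, xi in zip(row, x)) for row in w]

online = [[0.0] * N_STATES for _ in range(N_ACTIONS)]
target = copy.deepcopy(online)
replay = deque(maxlen=500)
step = 0

for episode in range(200):
    epsilon = max(0.1, 1.0 - episode / 100)          # anneal exploration over time
    s, done = 0, False
    for _ in range(100):                             # cap on episode length
        x = one_hot(s)                               # 1. observe the current state
        if rng.random() < epsilon:                   # 2. epsilon-greedy action choice
            a = rng.randrange(N_ACTIONS)
        else:
            qs = q_values(online, x)
            a = max(range(N_ACTIONS), key=lambda i: qs[i])
        s2, r, done = env_step(s, a)                 # 3. receive reward and next state
        replay.append((x, a, r, one_hot(s2), done))  # 4. store the experience
        s, step = s2, step + 1
        if len(replay) >= BATCH_SIZE:
            batch = rng.sample(list(replay), BATCH_SIZE)   # 5. sample a mini-batch
            for bx, ba, br, bx2, bdone in batch:
                y = br if bdone else br + GAMMA * max(q_values(target, bx2))
                error = y - q_values(online, bx)[ba]
                # 6. one gradient step on 0.5 * error**2 for the chosen action's weights
                online[ba] = [wi + LR * error * xi for wi, xi in zip(online[ba], bx)]
        if step % SYNC_EVERY == 0:                   # 7. refresh the target network
            target = copy.deepcopy(online)
        if done:
            break

print([round(q, 2) for q in q_values(online, one_hot(N_STATES - 2))])
```

With one-hot features a linear model is equivalent to a table, so this toy shows only the control flow of the training process, not the generalization a deep network provides. The target `y` is treated as a constant during the update, which is why the gradient only flows through the online prediction.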

Key Enhancements to Basic DQN

Over time, researchers introduced improvements to fix DQN’s weaknesses:

- Double DQN – Reduces overestimation bias

- Dueling DQN – Separates value and advantage estimation

- Prioritized Experience Replay – Focuses on more informative experiences
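
The core of each enhancement fits in one small function. The sketch below uses hypothetical helper names and made-up Q-values. The prioritized sampler is deliberately simplified: it samples with replacement and leaves out the importance-sampling correction used in full prioritized replay, and `alpha=0.6` is just an illustrative default.

```python
import random

def dqn_target(reward, next_q_target, gamma):
    """Standard DQN: the target network both selects and evaluates the next action."""
    return reward + gamma * max(next_q_target)

def double_dqn_target(reward, next_q_online, next_q_target, gamma):
    """Double DQN: the online network selects the action, the target network evaluates it."""
    best = max(range(len(next_q_online)), key=lambda a: next_q_online[a])
    return reward + gamma * next_q_target[best]

def dueling_q(value, advantages):
    """Dueling head: Q(s, a) = V(s) + A(s, a) - mean over actions of A(s, .)."""
    mean_adv = sum(advantages) / len(advantages)
    return [value + adv - mean_adv for adv in advantages]

def prioritized_indices(priorities, batch_size, rng, alpha=0.6):
    """Sample buffer indices with probability proportional to priority**alpha."""
    weights = [p ** alpha for p in priorities]
    return rng.choices(range(len(priorities)), weights=weights, k=batch_size)

next_q_online = [1.0, 3.0, 2.0]          # made-up Q-values for the next state
next_q_target = [4.0, 0.5, 1.0]

print(dqn_target(0.0, next_q_target, gamma=0.5))                        # 2.0
print(double_dqn_target(0.0, next_q_online, next_q_target, gamma=0.5))  # 0.25
print(dueling_q(1.0, [0.0, 2.0, 4.0]))                                  # [-1.0, 1.0, 3.0]
print(prioritized_indices([0.1, 5.0, 0.1], batch_size=4, rng=random.Random(0)))
```

In the example the standard target takes the largest value the target network offers (4.0), while Double DQN uses the target network's estimate for the action the online network prefers (0.5). Decoupling selection from evaluation in this way is what reduces the overestimation bias.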

DQN vs Traditional Q-Learning

| Feature | Tabular Q-Learning | Deep Q-Network (DQN) |
|---|---|---|
| Q-Value Representation | Lookup table | Neural network |
| Scalability | Low | High |
| Handles Raw Input | No | Yes |
| Memory Requirement | Grows with the number of state-action pairs | Fixed by network size (plus the replay buffer) |
| Real-World Usability | Limited | Practical |

Pros and Cons of Deep Q-Networks

Pros

- Scales to complex environments

- Learns directly from raw data

- Eliminates manual feature engineering

- Proven success in games and simulations

Cons

- Training can be unstable

- Requires large computational resources

- Sensitive to hyperparameters

- Not ideal for continuous action spaces

Real-World Applications of DQN

Gaming and Simulations

- Atari games

- Strategy simulations

- Competitive AI agents

Robotics

- Path planning

- Object manipulation

- Control systems

Business and Technology

- Recommendation systems

- Resource allocation

- Automated decision-making systems

Conclusion: Why DQN Still Matters Today

Deep Q-Networks marked a turning point in artificial intelligence, proving that deep learning and reinforcement learning are far more powerful together than apart. While newer algorithms continue to evolve, DQN remains a foundational concept every AI enthusiast should understand.

If you’re exploring game AI, robotics, or intelligent decision systems, mastering DQN isn’t just useful, it’s essential. As reinforcement learning continues to shape the future of automation, DQN stands as the algorithm that opened the door.

Frequently Asked Questions (FAQ)

Q1: What problem does DQN solve in reinforcement learning?

Ans: DQN solves the limitation of traditional Q-learning by handling large and complex state spaces using neural networks instead of tables.

Q2: Is DQN suitable for beginners in reinforcement learning?

Ans: Yes, but it’s recommended to understand basic Q-learning concepts before diving into DQN implementations.

Q3: Why does DQN use experience replay?

Ans: Experience replay stabilizes learning by breaking correlations between consecutive experiences and improving sample efficiency.

Q4: What is the role of the target network?

Ans: The target network provides stable Q-value targets, preventing rapid oscillations during training.

Q5: Can DQN handle continuous action spaces?

Ans: Not directly. DQN outputs one Q-value per action and picks the maximum, so it needs a finite, discrete set of actions. Algorithms such as DDPG are designed for continuous actions.
