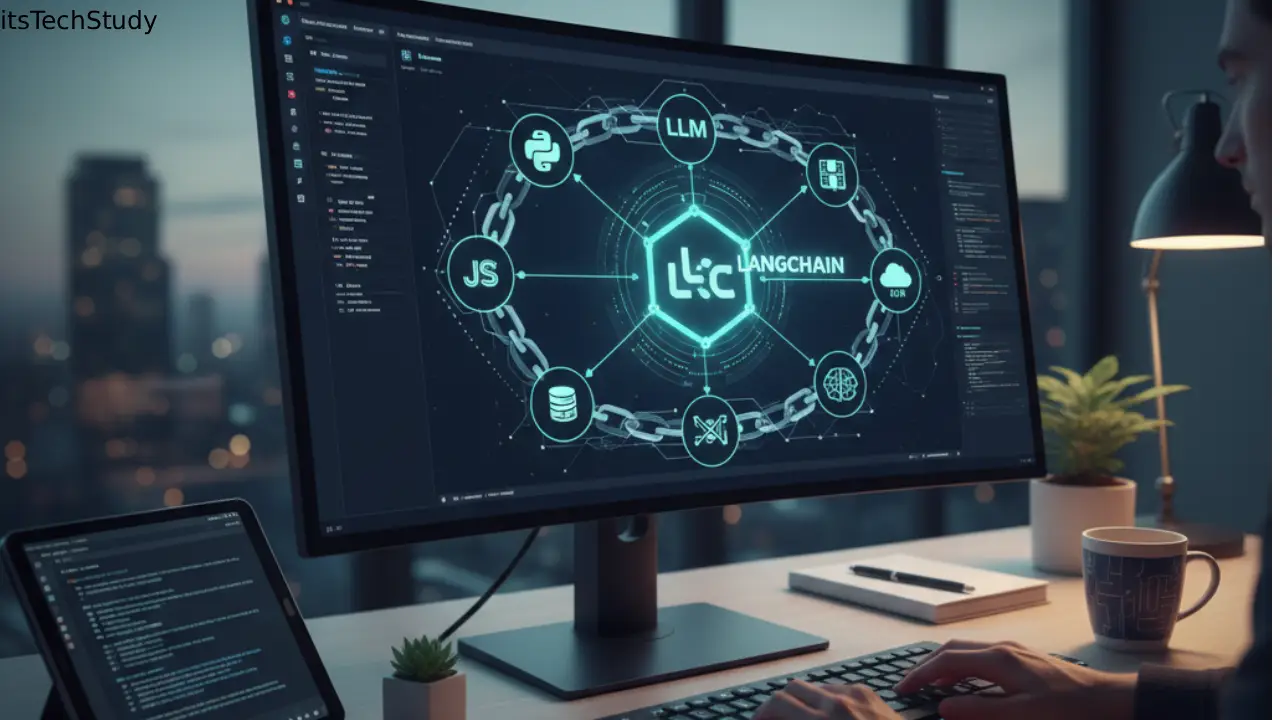

Introduction: The Rise of AI Frameworks and the LangChain Revolution

Artificial Intelligence (AI) has evolved from a futuristic dream into an essential part of daily life. From intelligent chatbots and virtual assistants to content generators and code interpreters, AI systems now power everything from healthcare to entertainment. However, developing advanced AI applications is not as simple as connecting to an API – it requires orchestrating models, managing data flows, and integrating various components seamlessly.

That’s where LangChain steps in.

LangChain is an open-source framework that simplifies building applications powered by large language models (LLMs). It acts as a bridge between models such as OpenAI’s GPT-4, Anthropic’s Claude, or Cohere’s models, and the tools, data, and APIs that developers use to bring those models to life.

But here’s the challenge – while AI models can generate text, summarize information, or answer questions, a model on its own is stateless: it does not remember earlier calls, cannot read your private data, and cannot trigger actions in other systems. Developers often face hurdles wiring models, data, and tools together effectively.

LangChain solves this exact problem by allowing developers to chain multiple AI components into a unified, intelligent system. Whether you’re building a smart chatbot, AI-powered research assistant, or automated content generator, LangChain provides the architecture and tools to make it happen.

In this article, we’ll break down how to use LangChain to build AI applications from scratch, explore its ecosystem, and guide you through best practices, real examples, and practical tips.

What Is LangChain and Why Does It Matter?

LangChain is a modular framework built specifically for LLM orchestration – meaning it helps developers connect different AI components in a structured, scalable, and efficient way.

It provides the following capabilities:

- Prompt management: Handle prompt templates dynamically.

- Memory: Maintain context and continuity across interactions.

- Chains: Connect multiple AI operations or steps logically.

- Agents: Enable decision-making and tool usage by AI models.

- Retrievers: Allow models to access and use external data sources.

Why LangChain is a Game-Changer

| Feature | Description | Example Use Case |
|---|---|---|
| Prompt Templates | Reusable prompt structures with placeholders for user input | Creating dynamic chatbot conversations |
| Chains | Sequential or conditional steps of AI logic | Multi-step Q&A systems |
| Agents | Models that make decisions and call tools | Smart assistants accessing APIs |
| Memory | Stores past interactions for contextual awareness | Conversational bots remembering user preferences |
| Retrievers | Fetch external data (like PDFs, databases) | AI knowledge assistants |

With these components, developers can move beyond one-off AI responses and build interactive, context-aware systems that carry state between turns and act on external data.

Setting Up LangChain: Step-by-Step Guide

1. Install the Required Tools

Before you begin, ensure you have Python 3.9+ installed. Then, install LangChain and the integration package for your model provider (here, OpenAI):

pip install langchain langchain-openai

You may also want to install additional libraries for storage or retrieval (like FAISS or Chroma):

pip install chromadb faiss-cpu

2. Get an API Key

If you’re using OpenAI, create an account and get your API key from:

https://platform.openai.com/account/api-keys

Store it safely using environment variables:

export OPENAI_API_KEY="your_api_key_here"
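Before wiring up any model, it can help to fail fast when the key is missing. The following is a minimal sketch in plain Python; the helper name `get_api_key` is made up for illustration and is not part of LangChain or the OpenAI SDK.

```python
import os


def get_api_key(var_name: str = "OPENAI_API_KEY") -> str:
    """Return the API key from the environment, or raise a clear error.

    `get_api_key` is a hypothetical helper, not a LangChain function.
    """
    key = os.environ.get(var_name, "").strip()
    if not key:
        raise RuntimeError(
            f"{var_name} is not set; export it before running the app."
        )
    return key
```

Calling `get_api_key()` once at startup surfaces a missing key immediately, rather than on the first model call.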

3. Create Your First LangChain App

Let’s create a simple text summarizer using LangChain.

# Requires the langchain-openai package: pip install langchain langchain-openai

from langchain_openai import OpenAI

from langchain_core.prompts import PromptTemplate

# Define the prompt with a {text} placeholder

template = "Summarize the following text in one paragraph:\n{text}"

prompt = PromptTemplate(input_variables=["text"], template=template)

# Initialize the model (reads OPENAI_API_KEY from the environment) and compose the chain

llm = OpenAI(temperature=0.7)

chain = prompt | llm

# Run the chain

summary = chain.invoke({"text": "LangChain helps developers build LLM-powered applications easily."})

print(summary)

What this does:

- Defines a prompt template

- Connects it to an LLM

- Executes a chain that summarizes text

Core Concepts of LangChain Explained

1. Prompt Templates

Prompts are the foundation of any AI application. LangChain allows developers to define flexible prompt templates that adapt dynamically to inputs, improving the consistency and reliability of model outputs.
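To make the idea concrete, here is a small plain-Python sketch of what a prompt template does: it holds a string with named placeholders and checks that every placeholder is filled. `SimplePromptTemplate` is a toy class written for this article, not LangChain’s own `PromptTemplate`, which offers considerably more.

```python
from string import Formatter


class SimplePromptTemplate:
    """Toy stand-in for a prompt template (hypothetical, not LangChain's class)."""

    def __init__(self, template: str):
        self.template = template
        # Collect placeholder names such as {text} or {style}.
        self.input_variables = sorted(
            {name for _, name, _, _ in Formatter().parse(template) if name}
        )

    def format(self, **values: str) -> str:
        # Refuse to build a prompt with unfilled placeholders.
        missing = [v for v in self.input_variables if v not in values]
        if missing:
            raise KeyError(f"Missing prompt variables: {missing}")
        return self.template.format(**values)


summary_prompt = SimplePromptTemplate(
    "Summarize the following text in {style} style:\n{text}"
)
print(summary_prompt.format(
    style="bullet-point",
    text="LangChain connects LLMs to tools and data.",
))
```

The same template can be reused with different inputs, which is what keeps prompts consistent across an application.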

2. Chains

Chains connect different steps of an AI workflow. You can combine multiple models or tasks in a sequence, for example:

- Step 1: Summarize text

- Step 2: Translate it

- Step 3: Extract insights

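LangChain’s classic API offers SequentialChain and SimpleSequentialChain for this kind of orchestration, and newer releases compose steps with the pipe operator (for example, prompt | llm).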
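The three steps above can be sketched without any framework to show what a sequential chain boils down to: each step takes the previous step’s output as its input. The step functions below are stubs standing in for real LLM calls, and `make_chain` is a hypothetical helper, not a LangChain function.

```python
from typing import Callable, List

Step = Callable[[str], str]


def make_chain(steps: List[Step]) -> Step:
    """Compose steps so each one receives the previous step's output."""
    def run(text: str) -> str:
        for step in steps:
            text = step(text)
        return text
    return run


# Stub steps: in a real app each of these would call a model.
def summarize(text: str) -> str:
    return text.split(".")[0].strip() + "."


def translate(text: str) -> str:
    return f"[fr] {text}"


def extract_insights(text: str) -> str:
    return f"Insight: {text}"


pipeline = make_chain([summarize, translate, extract_insights])
print(pipeline("LangChain chains steps together. It also supports agents."))
```

Swapping a stub for a real model call does not change the shape of the pipeline, which is the main appeal of thinking in chains.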

3. Memory

Memory allows your AI application to remember past interactions. For instance, a customer support chatbot can recall a user’s name or previous issue, which makes the conversation feel more natural.

Example:

from langchain.memory import ConversationBufferMemory

memory = ConversationBufferMemory()
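Under the hood, conversational memory is mostly bookkeeping: store past turns, then paste them back into the next prompt. Here is a rough plain-Python sketch of a windowed buffer that keeps only the most recent turns. `WindowMemory` is a hypothetical class for illustration and does not mirror LangChain’s memory interface.

```python
from collections import deque


class WindowMemory:
    """Keep the last `max_turns` exchanges (hypothetical helper class)."""

    def __init__(self, max_turns: int = 3):
        # A bounded deque silently drops the oldest turn when full.
        self.turns = deque(maxlen=max_turns)

    def save(self, user: str, ai: str) -> None:
        self.turns.append((user, ai))

    def as_prompt_context(self) -> str:
        # Render stored turns as text to prepend to the next prompt.
        return "\n".join(f"Human: {u}\nAI: {a}" for u, a in self.turns)


window = WindowMemory(max_turns=2)
window.save("My name is Ada.", "Nice to meet you, Ada.")
window.save("I can't log in.", "Let's reset your password.")
window.save("It worked.", "Glad to hear it.")
print(window.as_prompt_context())
```

Capping the window trades recall for cost: the first exchange is forgotten here, but the prompt stays short.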

4. Agents and Tools

Agents let the model choose its next action. Given a question and a description of the available tools, the model decides whether to search the web, query a database, or run a function, and the developer does not have to hard-code the sequence of steps.
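The core loop of an agent can be sketched in a few lines: something decides which tool to use, and the program dispatches to it. In this plain-Python illustration the decision is made by `fake_model_decide`, a hard-coded stand-in for the LLM; the tool names and functions are all hypothetical.

```python
from typing import Callable, Dict, Tuple


def calculator(expression: str) -> str:
    # Toy tool: only understands "a + b".
    left, right = expression.split("+")
    return str(float(left) + float(right))


def lookup(term: str) -> str:
    # Toy tool: a one-entry glossary.
    glossary = {
        "langchain": "An open-source framework for building LLM applications."
    }
    return glossary.get(term.strip().lower(), "No entry found.")


TOOLS: Dict[str, Callable[[str], str]] = {
    "calculator": calculator,
    "lookup": lookup,
}


def fake_model_decide(question: str) -> Tuple[str, str]:
    """Stand-in for the LLM's choice: returns (tool_name, tool_input)."""
    tool_input = question.rstrip("?").replace("What is", "").strip()
    tool_name = "calculator" if "+" in question else "lookup"
    return tool_name, tool_input


def run_agent(question: str) -> str:
    tool_name, tool_input = fake_model_decide(question)
    return TOOLS[tool_name](tool_input)


print(run_agent("What is 2 + 3?"))
print(run_agent("What is LangChain?"))
```

In a real agent the model produces the tool name and input itself, usually over several rounds, but the dispatch table stays the same idea.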

Practical Example: Building a Smart Research Assistant

Let’s imagine you’re building a research assistant that:

- Answers questions

- Fetches information from documents

- Summarizes and structures insights

Here’s how you can structure it with LangChain:

- Use a Retriever to connect to a document database (e.g., PDFs or web data).

- Set up a chain (a prompt plus a model) to interpret queries.

- Add Memory to maintain context over a research session.

- Integrate Tools like web search or data APIs.
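The retrieval part of this design can be sketched as follows. To stay dependency-free, this toy version ranks documents by keyword overlap instead of the embedding similarity a real vector store would use; the documents, function names, and prompt wording are all made up for illustration.

```python
import re
from typing import List, Set

DOCUMENTS = [
    "LangChain provides chains, agents, memory, and retrievers.",
    "FAISS and Chroma are vector stores used for similarity search.",
    "Prompt templates are reusable prompts with placeholders.",
]


def tokenize(text: str) -> Set[str]:
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(query: str, docs: List[str], k: int = 1) -> List[str]:
    """Return the k documents sharing the most words with the query."""
    query_words = tokenize(query)
    ranked = sorted(
        docs, key=lambda d: len(query_words & tokenize(d)), reverse=True
    )
    return ranked[:k]


def build_prompt(question: str, context: List[str]) -> str:
    # Put the retrieved passages in front of the question.
    joined = "\n".join(f"- {c}" for c in context)
    return f"Answer using only this context:\n{joined}\n\nQuestion: {question}"


question = "Which vector stores support similarity search?"
print(build_prompt(question, retrieve(question, DOCUMENTS)))
```

The resulting prompt is what would be sent to the model; memory and tools would then be layered on top of this retrieve-then-ask step.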

With a relatively small amount of code, you can assemble a working prototype of a research tool that feels interactive and intelligent, then harden it as your needs grow.

Pros and Cons of Using LangChain

| Pros | Cons |
|---|---|
| Highly modular and flexible | Can be complex for absolute beginners |
| Supports multiple LLMs (OpenAI, Anthropic, etc.) | Requires understanding of API integrations |
| Great for building contextual AI apps | May need optimization for large data |
| Large, active developer community | Frequent updates may break old versions |
| Excellent documentation and tutorials | Some advanced tools may require paid APIs |

Best Use Cases for LangChain

LangChain can power a wide variety of AI applications. Some popular use cases include:

- Chatbots and Conversational AI

- AI-Powered Search Engines

- Document Summarizers

- Knowledge Management Tools

- AI Tutors or Study Assistants

- Customer Support Automation

- Custom Content Generators

Each of these relies on LangChain’s core principles – connecting LLMs, data, and logic into one intelligent system.

Tips to Build Better AI Applications with LangChain

- Start Simple: Begin with a small prototype before scaling.

- Use Memory Wisely: Too much context can slow responses.

- Monitor API Costs: Optimize prompts to reduce token usage.

- Add Custom Tools: Extend functionality beyond text generation.

- Test and Iterate: Continuously refine prompt structures for accuracy.

- Use Vector Databases: Combine LangChain with Chroma or Pinecone for efficient retrieval.
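For the cost tip in particular, a back-of-the-envelope estimate is often enough to compare two prompt styles. The sketch below assumes the common rule of thumb of roughly four characters per token for English text; the price used is a placeholder, not a real rate, and a proper tokenizer will give more accurate counts.

```python
def estimate_tokens(text: str) -> int:
    """Rough heuristic: about 4 characters per token (an approximation)."""
    return max(1, len(text) // 4)


def estimate_cost(prompt: str, expected_output_tokens: int,
                  price_per_1k_tokens: float) -> float:
    """Estimate the cost of one call; the price is supplied by the caller."""
    total_tokens = estimate_tokens(prompt) + expected_output_tokens
    return total_tokens / 1000 * price_per_1k_tokens


document = "x" * 400  # stand-in for the text being summarized
verbose = ("Please, if you would be so kind, carefully summarize the "
           "following text in one paragraph: " + document)
concise = "Summarize in one paragraph: " + document

HYPOTHETICAL_PRICE = 0.5  # placeholder price per 1,000 tokens
for name, prompt in [("verbose", verbose), ("concise", concise)]:
    cost = estimate_cost(prompt, 100, HYPOTHETICAL_PRICE)
    print(name, estimate_tokens(prompt), round(cost, 4))
```

The difference per call is small, but it multiplies across every request an application makes.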

Comparison Table: LangChain vs Alternatives

| Framework | Best For | Language Support | Integrations | Ease of Use |
|---|---|---|---|---|
| LangChain | LLM Orchestration | Python, JS | OpenAI, Chroma, Pinecone | ★★★★☆ |
| LlamaIndex (GPT Index) | Data Retrieval | Python | OpenAI, FAISS | ★★★☆☆ |
| Haystack | Search Pipelines | Python | Hugging Face, Elasticsearch | ★★★☆☆ |
| Promptify | Lightweight Prompting | Python | Limited | ★★☆☆☆ |

Verdict:

LangChain stands out for its flexibility, integration power, and large community support, making it ideal for building scalable AI apps from scratch.

Conclusion: The Future of AI Development with LangChain

LangChain is redefining how developers build AI applications. It abstracts away complexity while giving creators the flexibility to design custom workflows. Whether you’re creating a chatbot, automation tool, or intelligent research assistant, LangChain offers the foundation to make it smarter, faster, and more efficient.

As the AI ecosystem continues to grow, frameworks like LangChain will become essential for connecting large language models to real-world logic and data. If you’re serious about AI development, learning LangChain is not just a skill – it’s a superpower that unlocks the full potential of generative AI.

FAQs About LangChain

Q1: What is LangChain used for?

Ans: LangChain is used to build complex AI applications by chaining together large language models, prompts, and data sources. It helps developers create intelligent, context-aware systems.

Q2: Is LangChain free to use?

Ans: Yes, LangChain is open-source. However, hosted model providers like OpenAI require API keys and may charge for usage.

Q3: Can I use LangChain without Python?

Ans: While Python is the main language, LangChain also supports JavaScript/TypeScript, making it accessible for web developers.

Q4: Does LangChain work offline?

Ans: LangChain itself can run offline, but most LLMs it connects to (like OpenAI) require internet access for API calls.

Q5: What are the system requirements for LangChain?

Ans: A standard system with Python 3.9+ and internet access is enough. For large-scale applications, cloud or GPU support is beneficial.

Q6: How does LangChain handle memory and context?

Ans: LangChain provides memory modules that store past interactions, enabling conversational AI applications that remember user history.
