Introduction

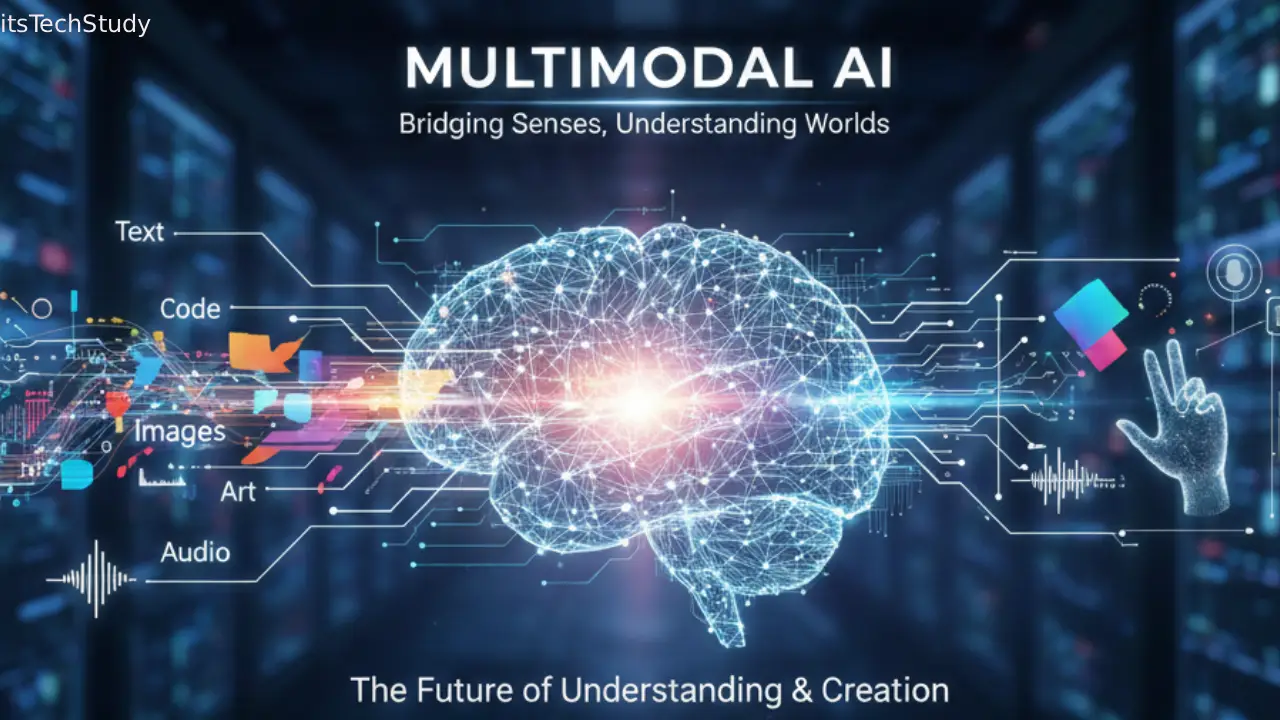

Technology has come a long way: from computers that could only crunch numbers to digital systems that can process human language, recognize emotional cues, and now interpret real-world sensory input. For decades, most AI systems were unimodal, meaning each one worked with a single type of data, such as text, speech, or images. These systems helped automate tasks and boost productivity, but they shared a core limitation: real-life problems are rarely one-dimensional.

Today, the world generates more varied data than ever: videos, audio recordings, photos, text messages, 3D scans, sensor readings, social media content, and more. Single-mode AI struggles to interpret such diverse, interconnected sources. This is where Multimodal AI comes in. It marks a new era of AI that can combine and interpret several forms of input at once, much as people combine sight, sound, and language to make sense of the world.

Multimodal AI models can see, hear, speak, and read, and they can relate context across these sources. This makes them more capable and adaptable than earlier single-mode systems. From self-driving cars and medical diagnostics to smart assistants and creative content generation, multimodal systems are driving innovation across many industries.

In this article, we will explore what Multimodal AI is, how it works, its real-world applications, challenges, and future trends, and why many consider it one of the most important developments in artificial intelligence today.

What is Multimodal AI?

Multimodal AI refers to artificial intelligence systems that can process and understand multiple types of data, such as text, images, audio, video, and sensor signals, together. Instead of relying on a single input channel, these models integrate different data sources to produce more accurate and meaningful outputs.

Example

If you show a traditional image-recognition model a picture of a cat, it might simply label it “cat.”

A multimodal AI system can analyze the picture, read accompanying text, listen to audio, and conclude:

“This is a cat sitting on a sofa, and the sound indicates it is meowing.”

This deeper contextual understanding supports better-informed decisions and more natural interactions.

How Multimodal AI Works

Multimodal AI integrates several advanced technologies:

Key Components

- Deep Learning – Neural networks process and learn from large datasets.

- Computer Vision – Interprets visual data such as images and video.

- Natural Language Processing (NLP) – Understands and generates human language.

- Speech Recognition – Converts spoken words into text.

- Sensor Fusion – Combines data from sensors for robotics and automation.

- Generative AI Models – Create new content including images, text, audio, and 3D designs.

How Multimodal Models Combine Data

- Input Collection – Collects text, video, audio, images, etc.

- Feature Extraction – Converts each input into a numerical vector representation (an embedding).

- Data Fusion – Merges the per-modality representations into a single, unified context.

- Processing & Decision – Uses neural networks to analyze and generate predictions.

- Output Generation – Produces responses or actions based on combined understanding.
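The five steps above can be illustrated with a deliberately tiny Python sketch. The "encoders" here are hypothetical hand-written feature functions standing in for trained neural networks, fusion is plain concatenation (often called early fusion), and a single logistic unit with made-up weights stands in for the decision network. It shows the data flow only, not how production models are built.

```python
import math

# Feature extraction: hypothetical toy encoders. Real systems use trained
# neural networks (e.g., a vision model for images, a language model for text).
def encode_text(text: str) -> list[float]:
    """Text features: word count and number of cat-related words."""
    words = text.lower().split()
    cat_words = sum(w in {"cat", "kitten", "meow"} for w in words)
    return [float(len(words)), float(cat_words)]


def encode_audio(samples: list[float]) -> list[float]:
    """Audio features: root-mean-square loudness and peak amplitude."""
    rms = math.sqrt(sum(s * s for s in samples) / len(samples))
    return [rms, max(abs(s) for s in samples)]


def encode_image(pixels: list[list[float]]) -> list[float]:
    """Grayscale image features: mean brightness and contrast (max - min)."""
    flat = [p for row in pixels for p in row]
    return [sum(flat) / len(flat), max(flat) - min(flat)]


def fuse(*features: list[float]) -> list[float]:
    """Data fusion (early fusion): concatenate per-modality vectors into one."""
    fused: list[float] = []
    for f in features:
        fused.extend(f)
    return fused


def decide(fused: list[float], weights: list[float], bias: float) -> float:
    """Processing & decision: one logistic unit in place of a neural network."""
    z = sum(x * w for x, w in zip(fused, weights)) + bias
    return 1.0 / (1.0 + math.exp(-z))


# Input collection (hypothetical inputs), then the full pipeline.
text_vec = encode_text("a cat says meow")
audio_vec = encode_audio([0.0, 0.5, -0.5, 0.25])
image_vec = encode_image([[0.2, 0.8], [0.4, 0.6]])
fused = fuse(text_vec, audio_vec, image_vec)
score = decide(fused, weights=[0.0, 1.0, 0.5, 0.5, 0.2, 0.2], bias=-1.0)

# Output generation: turn the score into a response.
print(f"fused vector length: {len(fused)}, cat probability: {score:.2f}")
```

Concatenation is the simplest fusion strategy; larger models often fuse modalities with learned layers such as cross-attention instead.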

Why Multimodal AI Matters: Current Challenges It Solves

| Previous AI Limitations | How Multimodal AI Solves Them |
|---|---|
| Single-data dependency | Integrates multiple sources for deeper understanding |
| Limited accuracy in real-world settings | Context-aware learning improves reliability |
| Difficulty handling complex tasks | Supports dynamic reasoning and real-time decisions |
| Poor communication ability with humans | Enables richer natural interaction |
| Not suitable for diverse domains | Adaptable for healthcare, automotive, education, media & more |

Multimodal AI narrows the gap between human perception and machine intelligence, enabling experiences that feel more natural and intuitive.

Real-World Applications of Multimodal AI

Multimodal AI is already being applied across many industries to solve practical problems.

1. Healthcare

- Diagnosing diseases using scans, lab reports, and patient history

- Medical chatbots analyzing symptoms via voice and text

- Real-time surgical assistance with visual recognition

2. Autonomous Vehicles

- Uses cameras, radar, lidar, and GPS

- Detects obstacles, signs, weather, and traffic patterns

- Enhances safety with real-time processing
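Vehicle sensor fusion is often built on probabilistic filters such as the Kalman filter. Its simplest building block, combining independent noisy estimates of the same quantity by inverse-variance weighting, can be sketched as follows. The sensor readings and variances are hypothetical numbers chosen for illustration, and the sketch assumes each sensor's noise is independent and unbiased.

```python
from dataclasses import dataclass


@dataclass
class Measurement:
    """A distance estimate from one sensor and its noise variance (m^2)."""
    sensor: str
    distance_m: float
    variance: float


def fuse_measurements(measurements: list[Measurement]) -> tuple[float, float]:
    """Inverse-variance weighting: more precise sensors get more weight.

    Returns the fused distance and its variance, assuming independent,
    unbiased noise on each sensor.
    """
    weights = [1.0 / m.variance for m in measurements]
    total = sum(weights)
    estimate = sum(w * m.distance_m for w, m in zip(weights, measurements)) / total
    return estimate, 1.0 / total


# Hypothetical readings of the distance to the car ahead.
readings = [
    Measurement("camera", 20.0, 4.0),   # camera depth assumed noisiest
    Measurement("radar", 21.0, 1.0),
    Measurement("lidar", 20.5, 0.25),   # lidar assumed most precise
]
distance, variance = fuse_measurements(readings)
print(f"fused distance: {distance:.2f} m, variance: {variance:.3f} m^2")
# fused distance: 20.57 m, variance: 0.190 m^2
```

The fused variance is smaller than that of even the best single sensor, which is the basic reason combining sensors improves reliability.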

3. Content Creation and Media

- AI that writes articles, creates images, composes music, and edits videos

- Real-time voice-to-animation conversion

- AI editors for storytelling, documentaries, and design

4. Education & Personalized Learning

- AI tutors analyzing voice, performance, and emotions

- Combined visual and audio learning support for students with disabilities

5. Customer Service & Virtual Assistants

- Assistants like ChatGPT, Gemini, Claude, and Copilot that understand text, voice, images, and documents

- Real-time troubleshooting using uploaded photos/videos

6. Security & Surveillance

- Identifies objects, activities, and abnormal behaviors

- Integrates audio and video for more reliable monitoring
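One common way to combine audio and video in monitoring is late fusion: each modality is analyzed by its own model, and only the resulting scores are combined. A minimal sketch, assuming each (hypothetical) model already returns an anomaly score between 0 and 1, and that the weights and alert threshold are set by hand:

```python
def late_fusion(scores: dict[str, float], weights: dict[str, float]) -> float:
    """Weighted average of per-modality anomaly scores (each in [0, 1]).

    Only modalities present in `scores` are averaged, so a missing
    sensor simply drops out instead of breaking the system.
    """
    total_weight = sum(weights[m] for m in scores)
    return sum(scores[m] * weights[m] for m in scores) / total_weight


def should_alert(scores: dict[str, float], weights: dict[str, float],
                 threshold: float = 0.6) -> bool:
    """Raise an alert when the fused score reaches the threshold."""
    return late_fusion(scores, weights) >= threshold


# Hypothetical weights and model outputs, for illustration only.
WEIGHTS = {"video": 0.6, "audio": 0.4}
video_only = {"video": 0.55}                      # ambiguous motion on camera
video_and_audio = {"video": 0.55, "audio": 0.9}   # plus a breaking-glass sound

print(should_alert(video_only, WEIGHTS))       # False
print(should_alert(video_and_audio, WEIGHTS))  # True
```

Here the ambiguous video score alone stays below the threshold, but a strong audio cue pushes the fused score over it.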

7. Retail & E-Commerce

- Visual product search (“search by photo”)

- Personalized recommendations based on behavior and context
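Visual product search typically works by mapping both the shopper's photo and the catalog images into the same embedding space, then returning the nearest items. The sketch below shows only the ranking step using cosine similarity; the three-dimensional vectors are hypothetical stand-ins for the much longer embeddings an image model would produce, and all vectors are assumed to be non-zero.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two non-zero vectors (1.0 = same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def search_by_photo(query: list[float], catalog: dict[str, list[float]],
                    top_k: int = 2) -> list[str]:
    """Return the names of the top_k catalog items most similar to the query."""
    ranked = sorted(catalog.items(),
                    key=lambda item: cosine_similarity(query, item[1]),
                    reverse=True)
    return [name for name, _ in ranked[:top_k]]


# Hypothetical catalog embeddings.
catalog = {
    "red sneaker": [0.9, 0.1, 0.0],
    "red handbag": [0.7, 0.0, 0.7],
    "blue sneaker": [0.1, 0.9, 0.1],
}
query = [0.85, 0.15, 0.05]  # hypothetical embedding of a shopper's photo
results = search_by_photo(query, catalog)
print(results)  # ['red sneaker', 'red handbag']
```

A real catalog with millions of items would use an approximate nearest-neighbor index rather than sorting every item for each query.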

Multimodal AI vs. Traditional AI

| Feature | Traditional AI | Multimodal AI |
|---|---|---|
| Input Type | Single data mode | Multiple data formats |
| Contextual Understanding | Low | High |
| Real-world performance | Limited | Advanced & accurate |
| Flexibility | Narrow | Broad and dynamic |
| Interaction Style | Text-only or voice-only | Natural human-like interaction |
| Use Cases | Simple tasks | Complex decision-based tasks |

Advantages and Disadvantages of Multimodal AI

Pros

- More accurate and intelligent decision-making

- Human-like interaction and understanding

- Performs better in complex real-world environments

- Enables new creative and analytical possibilities

- Reduces errors and improves automation

- Better personalization for users

Cons

- Requires huge datasets for training

- High development and operational cost

- Privacy risks and potential for misuse

- Complex integration with existing systems

- Computationally expensive and energy intensive

Who Benefits from Multimodal AI?

Best suited for:

- Software developers and AI researchers

- Businesses seeking automation and innovation

- Digital creators and media professionals

- Healthcare organizations and hospitals

- Educational institutions

- Automotive and robotics companies

Future Job Impact

Multimodal AI is likely to create new career opportunities in:

- Machine learning engineering

- Computer vision development

- AI-powered content production

- Data modeling and training

- Robotics and autonomous systems

Future of Multimodal AI

Over the next decade, multimodal AI is expected to advance well beyond today's capabilities. Key trends include:

Emerging Innovations

- Real-time multi-sensor robotics for home and industry

- Fully autonomous transportation

- Universal translators for global communication

- AI doctors, AI lawyers, and AI teachers

- AI-generated films, 3D designs, and virtual worlds

- Mixed reality experiences integrated with AI

- Neuro-AI systems bridging brain and machines

A long-term goal for many researchers is Artificial General Intelligence (AGI): machines that can think, learn, and reason like humans. Multimodal AI is widely seen as an important step on that path.

Conclusion

Multimodal AI is not just another technology trend; it is a fundamental shift in how people and computers interact. By integrating visual, verbal, and sensory data into a unified system, it brings machines closer to natural perception and understanding.

From autonomous vehicles to education, entertainment, healthcare, robotics, and everyday applications, multimodal AI is unlocking unprecedented possibilities. As the world moves deeper into digital transformation, embracing multimodal AI will be crucial for innovation, competitiveness, and progress.

The future is multimodal – and it is just beginning.

Frequently Asked Questions (FAQ)

Q1: How is multimodal AI different from traditional AI?

Ans: Traditional (unimodal) AI models typically process one type of input, such as text or images. Multimodal AI processes several formats, such as video, audio, and text, together to produce more accurate predictions and more natural output.

Q2: Is multimodal AI safe to use?

Ans: It can be, when developed responsibly and in compliance with strict privacy regulations. However, risks of misuse and data security concerns remain, so ethical development is essential.

Q3: Which industries use multimodal AI the most?

Ans: Healthcare, autonomous vehicles, education, media production, e-commerce, defense, robotics, and smart assistants.

Q4: What skills are required to work with multimodal AI?

Ans: Knowledge of deep learning, NLP, computer vision, Python, data science, model training, and cloud platforms.

Q5: Will multimodal AI replace human jobs?

Ans: It will transform roles more than it replaces them. New AI-driven skill sets and collaborations will emerge, requiring humans to focus on creativity and strategy.

Q6: Can multimodal AI create content such as images and videos?

Ans: Yes. Generative multimodal models can create text, images, audio, animations, video edits, and 3D content.
