Introduction: Why Q-Learning Still Matters in Today’s AI Landscape

Artificial intelligence has come a long way from rule-based systems that followed rigid instructions. Today’s intelligent systems learn by interacting with their environment, making decisions, and improving from experience. This shift is largely driven by reinforcement learning, a branch of machine learning that mimics how humans and animals learn through trial and error.

However, reinforcement learning hasn’t always been easy to implement. Early approaches struggled with scalability, slow learning, and heavy dependence on human-designed rules. As environments became more complex (think robotics, autonomous vehicles, or real-time game strategies), the need for a more flexible and self-improving learning method became clear.

This is where Q-Learning emerged as a breakthrough.

Q-Learning is one of the most influential reinforcement learning algorithms ever developed. Although Chris Watkins introduced it back in 1989, it remains highly relevant today and forms the foundation for modern techniques like Deep Q-Networks (DQN). Its simplicity, adaptability, and model-free nature make it a favorite for both beginners learning AI and professionals building intelligent systems.

In this article, we’ll explore what Q-Learning is, how it works, why it’s important, and where it’s used today, all in clear, conversational language: no unnecessary math overload, just practical understanding.

What Is Q-Learning?

Q-Learning is a model-free reinforcement learning algorithm that enables an agent to learn the best action to take in a given situation without needing prior knowledge of the environment.

At its core, Q-Learning is about learning how good an action is in a particular state. The “Q” stands for quality: the total future reward an agent can expect to collect by taking a certain action from a specific state.

Key Characteristics of Q-Learning

- Model-free: No need to understand environment dynamics in advance

- Off-policy: Learns the value of the optimal policy even while the agent follows a different, more exploratory behavior

- Iterative: Improves decisions over time through repeated interactions

- Goal-driven: Maximizes cumulative rewards, not just immediate gains

Because of these properties, Q-Learning is widely used in environments where rules are unclear, change frequently, or are too complex to model explicitly.

Core Concepts Behind Q-Learning

To truly understand Q-Learning, you need to grasp a few fundamental reinforcement learning concepts.

Agent and Environment

- Agent: The learner or decision-maker (e.g., a robot, game character, or software system)

- Environment: Everything the agent interacts with

The agent observes the environment, takes actions, and receives feedback in the form of rewards.

States, Actions, and Rewards

- State (S): The current situation of the agent

- Action (A): A choice the agent can make

- Reward (R): Feedback from the environment after an action

The agent’s objective is to maximize its total cumulative reward over time.
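To make "cumulative reward" concrete, here is a minimal sketch of how a sequence of rewards is usually added up. It assumes future rewards are discounted by a factor γ per step (the same discount factor that appears in the update rule later in this article); the function name `discounted_return` and the sample rewards are purely illustrative.

```python
def discounted_return(rewards, gamma=0.9):
    """Sum of rewards, where a reward k steps in the future is scaled by gamma**k."""
    total = 0.0
    # Work backwards so each step computes G_t = r_t + gamma * G_{t+1}.
    for reward in reversed(rewards):
        total = reward + gamma * total
    return total


# Three steps of made-up rewards: 1.0 now, 0.0 next, 2.0 after that.
print(discounted_return([1.0, 0.0, 2.0], gamma=0.5))  # 1.5
```

With γ = 0.5 the final reward of 2.0 counts for only 0.5 by the time it is discounted back to the first step, which is why the total is 1.5 rather than 3.0.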

The Q-Value

A Q-value represents the expected future reward for taking action A in state S and then following the optimal strategy afterward.

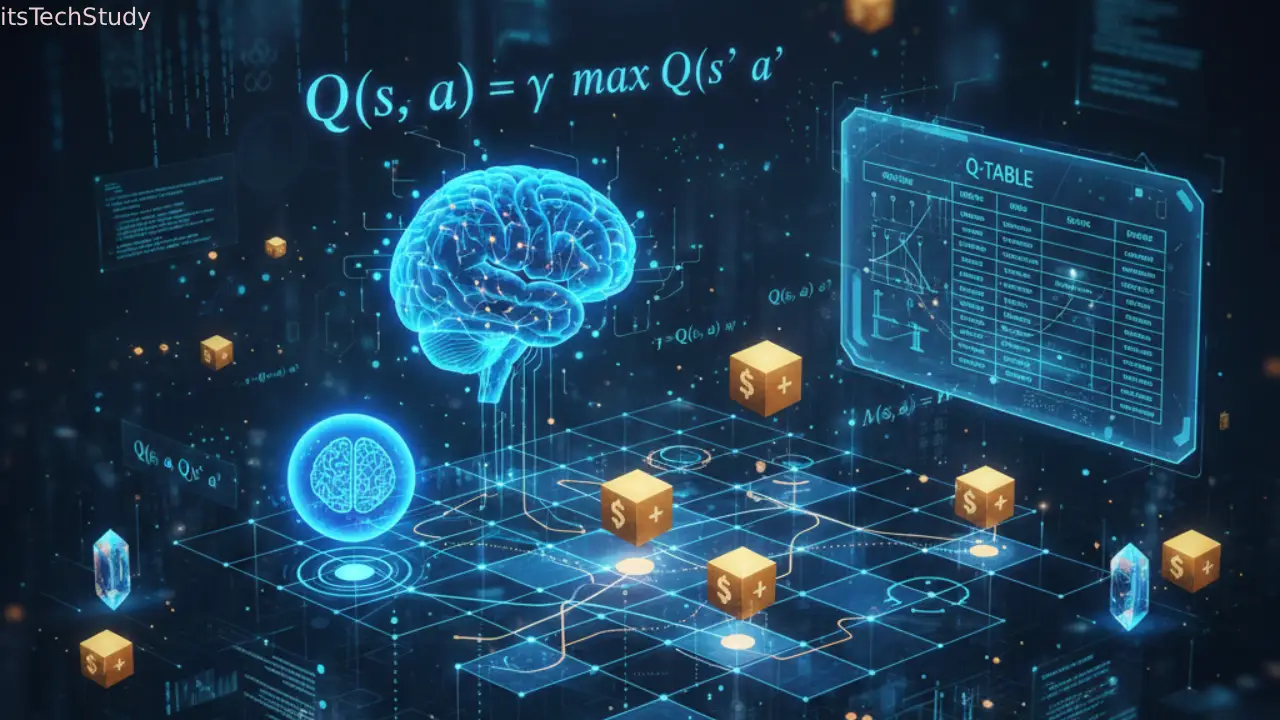

How Q-Learning Works Step by Step

Q-Learning learns by continuously updating a table of Q-values, commonly called the Q-table.
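As a rough picture of what a Q-table looks like in code, the sketch below stores one row per state and one column per action. The sizes, the hand-set value of 0.7, and the helper `best_action` are assumptions made up for illustration.

```python
n_states, n_actions = 4, 2  # hypothetical sizes for a tiny problem

# One row per state, one column per action, all starting at zero.
q_table = [[0.0] * n_actions for _ in range(n_states)]

# Pretend the agent has learned that action 1 is promising in state 2.
q_table[2][1] = 0.7


def best_action(q_table, state):
    """Index of the highest-valued action in the given state (first one on ties)."""
    row = q_table[state]
    return max(range(len(row)), key=lambda action: row[action])


print(best_action(q_table, 2))  # 1
```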

The Q-Learning Process

- Initialize the Q-table with arbitrary values (often zeros)

- Observe the current state

- Choose an action (exploration or exploitation)

- Perform the action

- Receive a reward and observe the next state

- Update the Q-value

- Repeat until learning converges
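The steps above can be sketched end to end on a toy problem. Everything about the environment here is an assumption for illustration: a five-cell corridor where the agent starts in cell 0, can move left or right, and earns a reward of 1.0 for reaching cell 4. The hyperparameter values are arbitrary choices, not recommendations.

```python
import random

N_STATES = 5      # corridor cells 0..4; cell 4 is the goal
ACTIONS = [0, 1]  # 0 = move left, 1 = move right


def step(state, action):
    """Hypothetical environment: returns (next_state, reward, done)."""
    next_state = max(0, state - 1) if action == 0 else state + 1
    done = next_state == N_STATES - 1
    return next_state, (1.0 if done else 0.0), done


def choose_action(q_table, state, epsilon, rng):
    """Explore with probability epsilon, otherwise exploit (ties broken at random)."""
    if rng.random() < epsilon:
        return rng.choice(ACTIONS)
    best = max(q_table[state])
    return rng.choice([a for a in ACTIONS if q_table[state][a] == best])


def train(episodes=500, alpha=0.5, gamma=0.9, epsilon=0.2, seed=0):
    rng = random.Random(seed)
    q_table = [[0.0, 0.0] for _ in range(N_STATES)]    # initialize the Q-table
    for _ in range(episodes):
        state = 0                                      # observe the current state
        for _ in range(200):                           # cap on steps per episode
            action = choose_action(q_table, state, epsilon, rng)
            next_state, reward, done = step(state, action)  # act, get reward
            # A terminal state has no future value, so the target is just the reward.
            target = reward if done else reward + gamma * max(q_table[next_state])
            q_table[state][action] += alpha * (target - q_table[state][action])
            state = next_state
            if done:
                break
    return q_table


q_table = train()
# Greedy action in each non-goal cell after training.
policy = [max(ACTIONS, key=lambda a: q_table[s][a]) for s in range(N_STATES - 1)]
print(policy)
```

After training, the greedy policy should choose "move right" (action 1) in every cell, since that is the shortest path to the reward.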

The Q-Learning Update Rule

The heart of Q-Learning lies in this update equation:

Q(s, a) ← Q(s, a) + α [ r + γ max_a' Q(s', a') − Q(s, a) ]

Where:

- α (alpha) = learning rate

- γ (gamma) = discount factor

- r = reward

- s' = next state

- a' = an action available in the next state (the max picks the best one)

This formula gradually adjusts Q-values toward better decisions based on experience.
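A single update is easy to work through by hand. The sketch below applies the rule once; the function name `q_update` and the numbers plugged in are made up for illustration.

```python
def q_update(q_sa, reward, max_q_next, alpha=0.1, gamma=0.9):
    """Apply one Q-Learning update to a single Q(s, a) value."""
    td_target = reward + gamma * max_q_next  # r + γ max Q(s', a')
    td_error = td_target - q_sa              # how far off the old estimate was
    return q_sa + alpha * td_error           # move a fraction α toward the target


# Old estimate 2.0, reward 1.0, best next-state value 5.0.
print(q_update(q_sa=2.0, reward=1.0, max_q_next=5.0, alpha=0.5, gamma=0.5))  # 2.75
```

Here the target is 1.0 + 0.5 × 5.0 = 3.5, the error is 3.5 − 2.0 = 1.5, and with α = 0.5 the estimate moves halfway there: 2.0 + 0.75 = 2.75.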

Exploration vs Exploitation in Q-Learning

One of the biggest challenges in reinforcement learning is balancing exploration and exploitation.

Exploration

- Trying new actions

- Helps discover better strategies

- Prevents getting stuck in suboptimal behavior

Exploitation

- Using known information

- Maximizes reward according to the agent’s current estimates

- Can miss better long-term solutions

Epsilon-Greedy Strategy

A common solution is the epsilon-greedy approach:

- With probability ε, choose a random action

- With probability (1 − ε), choose the best known action

Over time, ε is reduced so the agent gradually focuses on optimal behavior.
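One possible way to write this down is shown below. The exponential decay schedule and its default values (`start`, `end`, `decay`) are assumptions chosen for illustration; linear schedules are just as common.

```python
import random


def epsilon_greedy(q_values, epsilon, rng=random):
    """Pick a random action with probability epsilon, else the best known one."""
    if rng.random() < epsilon:
        return rng.randrange(len(q_values))                       # explore
    return max(range(len(q_values)), key=lambda a: q_values[a])   # exploit


def decayed_epsilon(episode, start=1.0, end=0.05, decay=0.99):
    """Shrink epsilon geometrically per episode, but never below `end`."""
    return max(end, start * decay ** episode)


q_values = [0.1, 0.9, 0.3]  # made-up Q-values for one state
print(epsilon_greedy(q_values, epsilon=0.0))  # 1 (pure exploitation)
print(decayed_epsilon(0))                     # 1.0 (fully random at the start)
```

Keeping a small floor on ε means the agent never stops exploring entirely, which helps if the environment changes later.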

Q-Learning vs Other Reinforcement Learning Algorithms

| Feature | Q-Learning | SARSA | Policy Gradient |
|---|---|---|---|
| Learning Type | Off-policy | On-policy | Policy-based |
| Environment Model | Not required | Not required | Not required |
| Stability | High | Medium | High |
| Scalability | Limited with tables | Limited | Better with deep learning |
| Ease of Implementation | Easy | Easy | Moderate |

This comparison highlights why Q-Learning is often the starting point for reinforcement learning practitioners.
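The off-policy versus on-policy distinction in the table comes down to one term in the update target. A small sketch, with function names and numbers invented for illustration:

```python
def q_learning_target(reward, next_q_values, gamma=0.9):
    """Off-policy: assume the best next action, whatever the agent actually does."""
    return reward + gamma * max(next_q_values)


def sarsa_target(reward, next_q_values, next_action, gamma=0.9):
    """On-policy: use the action the agent really takes next."""
    return reward + gamma * next_q_values[next_action]


next_q = [1.0, 4.0]  # made-up Q-values for the two actions in the next state
print(q_learning_target(0.0, next_q, gamma=0.5))            # 2.0
print(sarsa_target(0.0, next_q, next_action=0, gamma=0.5))  # 0.5
```

If the agent happens to explore with action 0 in the next state, SARSA's target reflects that weaker choice, while Q-Learning's target still assumes the best action is taken.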

Advantages of Q-Learning

Pros of Q-Learning

- Simple and intuitive algorithm

- Does not require environment modeling

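- Converges to the optimal Q-values in the tabular case, provided every state-action pair keeps being visited and the learning rate decays appropriately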

- Works well for small to medium state spaces

- Foundation for advanced deep reinforcement learning

These strengths make Q-Learning ideal for educational purposes and controlled environments.

Limitations and Challenges of Q-Learning

Cons of Q-Learning

- Poor scalability for large state spaces

- High memory usage due to Q-tables

- Slower convergence in complex environments

- Not suitable for continuous state/action spaces without approximation

To overcome these limitations, researchers extended Q-Learning into Deep Q-Learning, where neural networks replace Q-tables.
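A quick back-of-the-envelope calculation shows why tables stop scaling. The sketch assumes a state is described by several features, each taking a fixed number of discrete values; the function name and the example sizes are hypothetical.

```python
def q_table_entries(values_per_feature, n_features, n_actions):
    """Number of Q-values a table needs: one per (state, action) pair."""
    n_states = values_per_feature ** n_features
    return n_states * n_actions


# A 10 x 10 grid position (2 features) with 4 moves.
print(q_table_entries(10, 2, 4))  # 400

# Add four more features with 10 values each and the table explodes.
print(q_table_entries(10, 6, 4))  # 4000000
```

Each extra feature multiplies the table size, which is the gap that function approximators such as neural networks are meant to close.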

Real-World Applications of Q-Learning

Despite its simplicity, Q-Learning has been applied in many practical domains.

Common Use Cases

- Game AI: Learning optimal strategies in board games and simulations

- Robotics: Navigation and movement optimization

- Traffic signal control: Reducing congestion dynamically

- Recommendation systems: Adaptive content delivery

- Resource management: Optimizing system performance

Its ability to learn without predefined rules makes it extremely versatile.

Best Practices for Implementing Q-Learning

To get the most out of Q-Learning, follow these proven tips:

- Tune learning rate (α) carefully

- Use an appropriate discount factor (γ)

- Gradually reduce exploration rate

- Normalize rewards where possible

- Monitor convergence trends

Small adjustments can significantly improve learning efficiency.
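For the last tip, one simple signal to watch (among several possible ones) is how much the Q-table changes between checkpoints. The helper below is an illustrative sketch, not a standard API, and the two tables are made-up snapshots.

```python
def max_q_change(old_table, new_table):
    """Largest absolute change in any Q-value between two snapshots of the table."""
    return max(
        abs(new - old)
        for old_row, new_row in zip(old_table, new_table)
        for old, new in zip(old_row, new_row)
    )


before = [[0.0, 0.0], [1.0, 2.0]]
after = [[0.0, 0.5], [1.0, 1.75]]
print(max_q_change(before, after))  # 0.5
```

When this number stays close to zero over many episodes, the table has largely settled. Tracking the average reward per episode alongside it gives a second, more task-focused view.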

Conclusion: Why Q-Learning Is Still Worth Learning

Q-Learning may be one of the earliest reinforcement learning algorithms, but its impact is timeless. Its elegant approach to learning through experience laid the groundwork for today’s most advanced AI systems. Whether you’re a beginner exploring machine learning or a developer building intelligent applications, understanding Q-Learning provides invaluable insight into how machines learn to make decisions.

As AI systems grow more autonomous, the principles behind Q-Learning will continue to shape how intelligent agents interact with the world. Learning it today means being better prepared for the future of artificial intelligence.

Frequently Asked Questions (FAQ)

Q1: What is Q-Learning in simple terms?

Ans: Q-Learning is a reinforcement learning algorithm that teaches an agent how to act optimally by learning from rewards and penalties through experience.

Q2: Is Q-Learning supervised or unsupervised?

Ans: Q-Learning is neither. It falls under reinforcement learning, where learning happens through interaction and feedback.

Q3: What is the difference between Q-Learning and Deep Q-Learning?

Ans: Q-Learning uses tables to store values, while Deep Q-Learning uses neural networks to handle large or continuous state spaces.

Q4: Does Q-Learning guarantee optimal results?

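Ans: Yes, in the tabular setting. If every state-action pair keeps being visited and the learning rate decays appropriately, Q-Learning converges to the optimal Q-values, and acting greedily on them gives an optimal policy. Once a function approximator such as a neural network replaces the table, this guarantee no longer holds.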

Q5: Is Q-Learning still relevant today?

Ans: Absolutely. It remains a foundational algorithm and is widely used in modern AI research and applications.
